Photos: YouTube\Screenshots

“In my day,” began a voice endorsing a laissez-faire approach to police brutality, “no one would bat an eye” if a police officer killed 17 or 18 people. The voice in the video, which purportedly belonged to Chicago mayoral candidate Paul Vallas, went viral just before the city’s four-way primary in February.

It wasn’t a gaffe, a leak, or a hot-mic moment. It seemingly wasn’t even the work of a sly impersonator who had perfected his Paul Vallas impression. The video was a digital fabrication, a likely creation of generative artificial intelligence that was viewed thousands of times.

The episode heralds a new era in elections.

Next year will bring the first national campaign season in which widely accessible AI tools allow users to synthesize audio in anyone’s voice, generate photo-realistic images of anybody doing nearly anything, and power social media bot accounts with near human-level conversational abilities — and do so on a vast scale and with a reduced or negligible investment of money and time. Due to the popularization of chatbots and the search engines they are quickly being absorbed into, it will also be the first election season in which large numbers of voters routinely consume information that is not just curated by AI but is produced by AI.

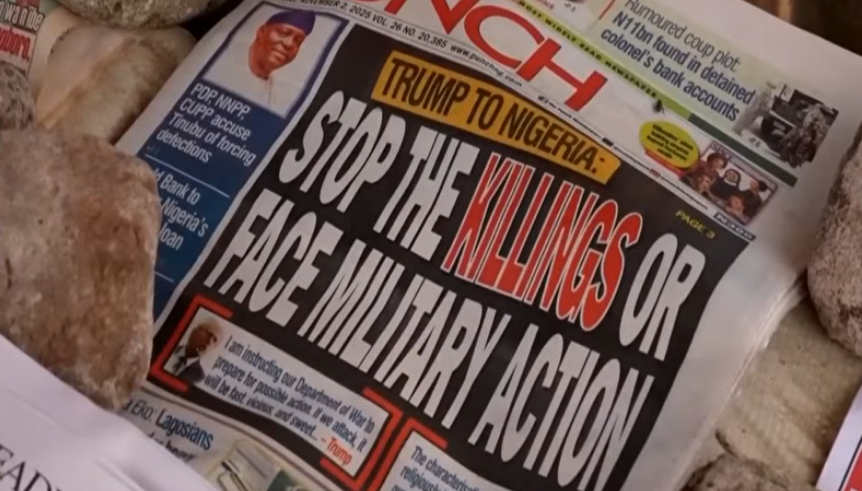

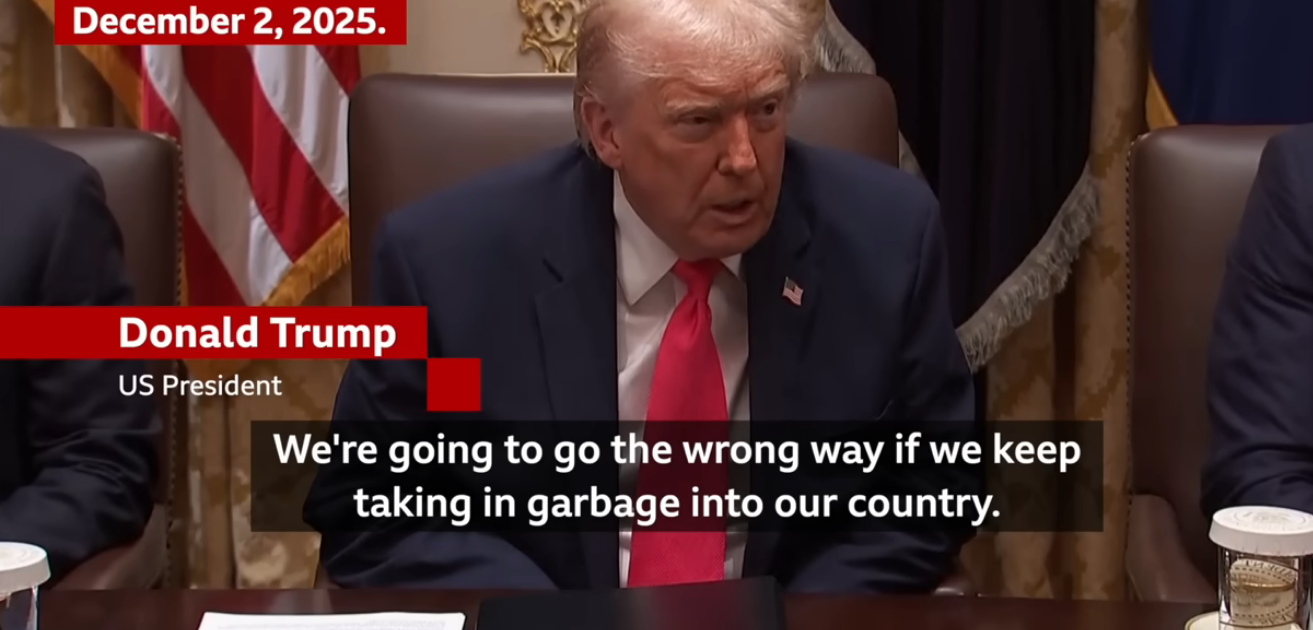

This change is already underway. In April, the Republican National Committee used AI to produce a video warning about potential dystopian crises during a second Biden term. Earlier this year, an AI-generated video showing President Biden declaring a national draft to aid Ukraine’s war effort — originally acknowledged as a deepfake but later stripped of that context — led to a misleading tweet that garnered over 8 million views. A deepfake also circulated depicting Sen. Elizabeth Warren (D-MA) insisting that Republicans should be barred from voting in 2024. In the future, malign actors could deploy generative AI with the intent to suppress votes or circumvent defenses that secure elections.

The AI challenge to elections is not limited to disinformation, or even to deliberate mischief. Many election offices use algorithmic systems to maintain voter registration databases and verify mail ballot signatures, among other tasks. As with human decisions on these questions, algorithmic decision-making has the potential for racial and other forms of bias. Some officials are also increasingly interested in using generative AI to aid with voter education, which creates opportunities to speed up processes but also carries serious risks of inaccurate and inequitable voter outreach.

AI advances have prompted an abundance of generalized concerns from the public and policymakers, but the impact of AI on the field of elections has received relatively little in-depth scrutiny given the outsize risk. This piece focuses on disinformation risks in 2024. Forthcoming Brennan Center analyses will examine additional areas of risk, including voter suppression, election security, and the use of AI in administering elections.

AI since the 2022 elections

While AI has been able to synthesize photo-quality “deepfake” profile pictures of nonexistent people for several years, it is only in recent months that the technology has progressed to the point where users can conjure lifelike images of nearly anything with a simple text prompt. Adept users have long been able to use Photoshop to edit images, but vast numbers of people can now create convincing images from scratch in a matter of seconds at a very low — or no — cost. Deepfake audio has similarly made enormous strides and can now clone a person’s voice with remarkably little training data.

While forerunners to the wildly popular app ChatGPT have been around for several years, OpenAI’s latest iteration is leaps and bounds beyond its predecessors in both popularity and capability. Apps like ChatGPT are powered by large language models, which are systems for encoding words as collections of numbers that reflect their usage in the vast swaths of the web selected for training the app. The launch of ChatGPT on November 30, 2022, just weeks after the midterm elections, has precipitated a new era in which many people regularly converse with AI systems and read content produced by AI.

Since ChatGPT’s debut, our entire information ecosystem has begun to be reshaped. Search engines are incorporating this kind of technology to provide users with information in a more conversational format, and some news sites have been using AI to produce articles more cheaply and quickly, despite its tendency to produce misinformation. Smaller (for now) replicas of ChatGPT and its antecedents are not limited to the American tech giants. For instance, China and Russia have their own versions. And researchers have found ways of training small models on the output of large models so that the small models perform nearly as well, enabling people around the globe to run custom versions on a personal laptop.

Unique vulnerability to disinformation

Elections are particularly vulnerable to AI-driven disinformation. Generative AI tools are most effective when producing content that bears some resemblance to the content in their training databases. The same false narratives crop up repeatedly in U.S. elections; as the Brennan Center and other disinformation researchers have found, election deniers do not reinvent the wheel. As a result, the training data underlying current generative AI tools contains plenty of past election disinformation, making those tools a potential ticking time bomb for future election disinformation. This includes core deceptions around the security of voting machines and mail voting, as well as misinformation tropes regularly applied to innocent and fast-resolved glitches that occur in most elections. Visual misinformation is widely available as well: for example, pictures of discarded mail ballots were used to distort election narratives in both the 2020 and 2022 elections.

Different kinds of AI tools will leave distinct footprints in future elections, threatening democracy in myriad ways. Deepfake images, audio, and video could prompt an uptick in viral moments around faux scandals or artificial glitches, further warping the nation’s civic conversation at election time. By seeding online spaces with millions of posts, malign actors could use language models to create the illusion of political agreement or the false impression of widespread belief in dishonest election narratives. Influence campaigns could deploy tailored chatbots to customize interactions based on voter characteristics, adapting manipulation tactics in real time to increase their persuasive effect. They could also use AI tools to send a wave of deceptive comments from fake “constituents” to election offices, as one researcher showed in 2019 by duping Idaho state officials with ChatGPT’s predecessor technology. Chatbots and deepfake audio could also exacerbate threats to election systems through phishing efforts that are personalized, convincing, and likely more effective than what we’ve seen in the past.

One need not look far to witness the potential for AI to distort the political conversation around the world: A viral deepfake showing Ukrainian President Volodymyr Zelenskyy surrendering to Russia. Pro-China bots sharing videos of AI-generated news anchors — at a sham outfit called “Wolf News” — promoting falsehoods flattering to China’s governing regime and critical of the United States, the first known example of a state-aligned campaign’s deployment of video-generation AI tools to create fictitious people. ChatGPT4 yielding to a request from researchers to write a message for a Soviet-style information campaign suggesting that HIV, the virus that can cause AIDS, was created by the U.S. government. Incidents like these could proliferate in 2024.
